AI Content Personalization for an E-Learning Tech Platform

AI development

Education

2 months

Web

About the project

Our client is a mid-sized EdTech company that offers a digital learning platform for middle and high school students. They had an established LMS with a growing user base but needed to boost student engagement. They wanted to integrate AI-driven content personalization without disrupting their existing architecture and core infrastructure. They also asked us to update the platform’s UX/UI design for a better user experience.

The client had

A working learning management system

The need for better student engagement and retention

We were responsible for

Implementing AI-based content recommendations

Updating the platform’s UX/UI design

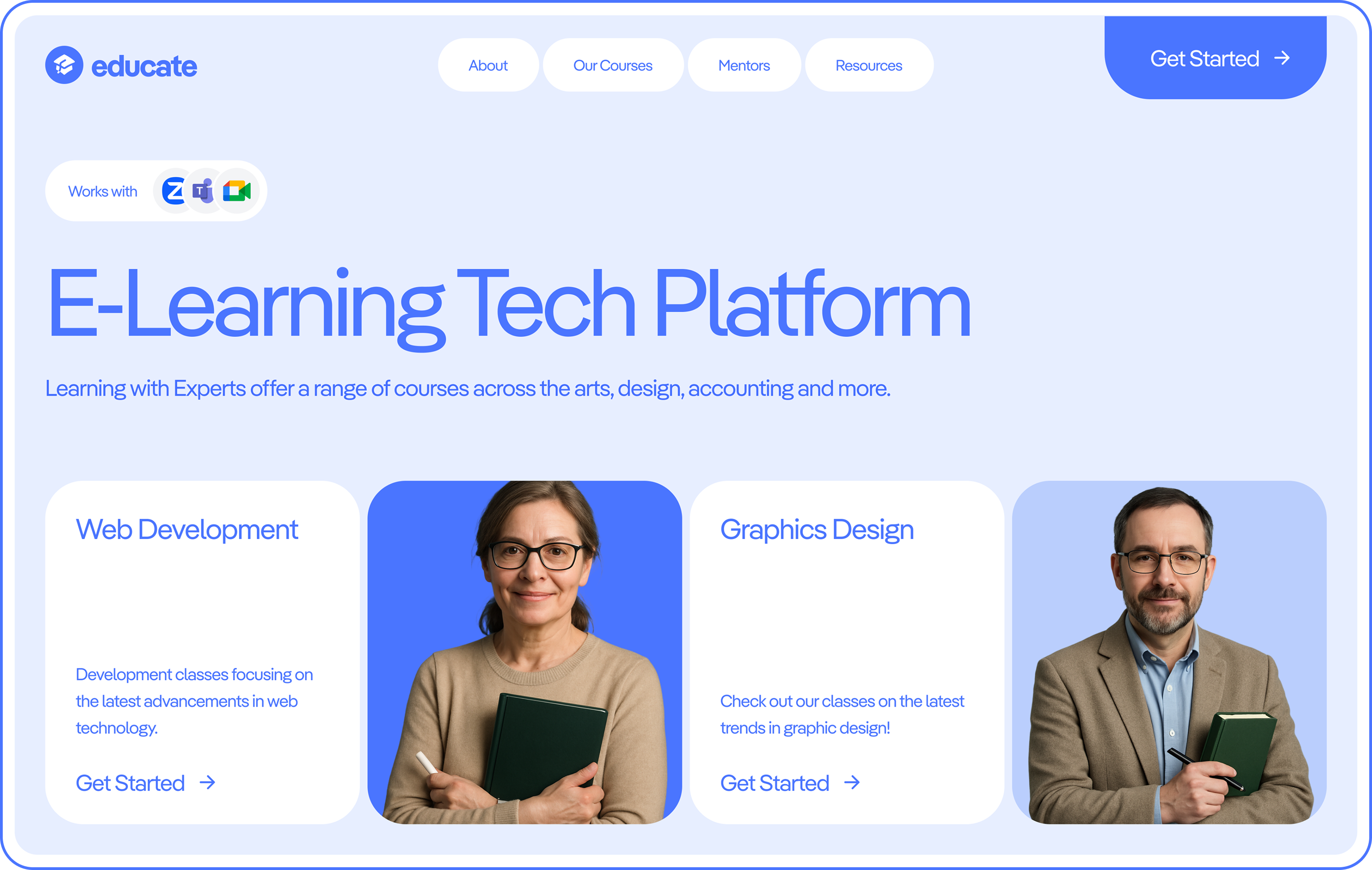

Project team

A dynamic IT team focused on delivering innovative solutions.

Tech Stack

Technologies and tools we used to improve the solution.

OpenAI fine-tuning API

LangChain

Pinecone

Python

FastAPI + Redis

Sentry + Prometheus

Key steps we took

Here is how we created the web tool

Discovery phase

Defining personalization objectives and success metrics

Auditing current content structure and evaluating available data sources

Selecting the most suitable LLM based on project requirements and constraints

LLM integration

Fine-tuning the LLM on student behavior data and course metadata

Building prompt templates to generate personalized learning suggestions

Implementing retrieval-augmented generation (RAG) for content-aware responses
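As an illustration of how a prompt template and retrieval step can fit together, here is a minimal sketch. It is not the production pipeline: the curriculum snippets, the `retrieve` and `build_prompt` helpers, and the prompt wording are all hypothetical, and a simple word-overlap cosine score stands in for the embedding search a vector store such as Pinecone would provide.

```python
import math
import re
from collections import Counter
from string import Template

# Hypothetical curriculum snippets; a real system would store embeddings in a vector database.
CURRICULUM = {
    "fractions-01": "Adding fractions requires a common denominator before combining numerators.",
    "photosynthesis-01": "Photosynthesis converts light, water, and carbon dioxide into glucose and oxygen.",
    "linear-eq-01": "A linear equation is solved by isolating the variable on one side.",
}

# Hypothetical prompt template; the wording is illustrative only.
PROMPT = Template(
    "You are a tutor for $grade students.\n"
    "Use only the curriculum excerpts below.\n"
    "Excerpts:\n$context\n"
    "Student question: $question\n"
    "Suggest one next learning step."
)


def tokenize(text):
    """Lowercase bag-of-words counts (a toy stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, k=1):
    """Return the k curriculum snippets most similar to the question."""
    query = tokenize(question)
    ranked = sorted(
        CURRICULUM.items(),
        key=lambda item: cosine(query, tokenize(item[1])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, grade="grade 8", k=1):
    """Fill the template with retrieved excerpts so the model answers from them."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(question, k))
    return PROMPT.substitute(grade=grade, context=context, question=question)


if __name__ == "__main__":
    print(build_prompt("How do I add fractions with a different denominator?"))
```

The resulting prompt string would then be sent to the model. Keeping the excerpt IDs in the prompt makes it easier to trace a suggestion back to its source material.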

Personalized UX implementation

Updating the main page and student dashboards

Including “smart tips,” difficulty adjustments, and optional quiz variants

Enabling teachers to preview and adjust AI-generated content

Backend development

Developing middleware to manage LLM interactions and data flow

Building scalable APIs to deliver personalized content to the LMS in real time

Ensuring backend flexibility to support future AI updates
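One common way for middleware like this to keep responses fast is a cache-aside layer in front of the model call. The sketch below is an assumption about how such a layer could look, not the client's actual code: `RecommendationService` and its parameters are hypothetical, and an in-memory dictionary stands in for Redis so the example runs on its own.

```python
import time
from typing import Callable, Dict, Tuple


class RecommendationService:
    """Cache-aside wrapper around an LLM call (a dict stands in for Redis here)."""

    def __init__(
        self,
        generate: Callable[[str, str], str],
        ttl_seconds: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ):
        self._generate = generate      # hypothetical function that calls the model
        self._ttl = ttl_seconds        # how long a cached suggestion stays valid
        self._clock = clock            # injectable clock, which simplifies testing
        self._cache: Dict[Tuple[str, str], Tuple[float, str]] = {}

    def recommend(self, student_id: str, lesson_id: str) -> str:
        key = (student_id, lesson_id)
        now = self._clock()
        cached = self._cache.get(key)
        if cached is not None and cached[0] > now:
            return cached[1]           # cache hit: skip the model call
        result = self._generate(student_id, lesson_id)
        self._cache[key] = (now + self._ttl, result)
        return result


if __name__ == "__main__":
    service = RecommendationService(lambda s, l: f"Review lesson {l} before the quiz.")
    print(service.recommend("student-42", "algebra-3"))
```

Because the model call is passed in as a plain function, the model behind it can be swapped without touching the API layer.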

Quality assurance

Conducting iterative testing with small student and teacher groups

Collecting structured feedback on content, performance, and UX

Refining prompts, interface elements, and model behavior

Deployment and monitoring

Launching in phases across progressively larger student groups

Setting up continuous monitoring for LLM output quality and system performance

Preparing support workflows for edge cases
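A phased launch is often implemented with deterministic percentage buckets, so a student stays in the same group as the rollout widens. The following sketch shows that general technique under our own assumptions. The function names and the salt value are hypothetical and do not describe the platform's actual rollout mechanism.

```python
import hashlib


def rollout_bucket(student_id: str, salt: str = "personalization-v1") -> int:
    """Map a student to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{salt}:{student_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(student_id: str, rollout_percent: int) -> bool:
    """Enable the feature for students whose bucket falls below the current percentage."""
    return rollout_bucket(student_id) < rollout_percent


if __name__ == "__main__":
    for percent in (10, 50, 100):
        enabled = sum(is_enabled(f"student-{i}", percent) for i in range(1000))
        print(f"{percent}% rollout enables {enabled} of 1000 sample students")
```

Raising the percentage only adds students to the enabled group. Anyone who already had the feature keeps it, which avoids a confusing on/off experience between phases.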

Project challenges and solutions

How our team dealt with a range of development challenges.

Model hallucinations and irrelevant suggestions

Problem: In some cases, the LLM generated content that was factually incorrect or off-topic.

Solution: We used retrieval-augmented generation (RAG) to ground responses in verified curriculum content. We also added confidence scoring with fallback responses, such as an “Ask your teacher” prompt for low-confidence outputs.
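The fallback logic can be pictured as a simple threshold check. This is a minimal sketch under stated assumptions: the `Suggestion` type, the 0.7 threshold, and the fallback wording are hypothetical, and how the confidence score is computed is left out because the case study does not specify it.

```python
from dataclasses import dataclass

# Hypothetical fallback wording shown instead of a low-confidence suggestion.
FALLBACK_MESSAGE = "I'm not sure about this one. Ask your teacher for help."


@dataclass(frozen=True)
class Suggestion:
    text: str
    confidence: float  # assumed to be a score between 0.0 and 1.0


def select_response(suggestion: Suggestion, threshold: float = 0.7) -> str:
    """Return the AI suggestion only when its confidence meets the threshold."""
    if suggestion.confidence < threshold:
        return FALLBACK_MESSAGE
    return suggestion.text


if __name__ == "__main__":
    print(select_response(Suggestion("Try the fractions practice set.", 0.91)))
    print(select_response(Suggestion("Mitochondria are a kind of fraction.", 0.32)))
```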

Lack of trust from educators

Problem: Some teachers hesitated to adopt AI-powered features since they felt “out of control” and “didn’t understand how it works.”

Solution: Our team provided transparent override tools (for example, teachers can accept, edit, or replace AI recommendations). We also included teachers in testing and feedback loops to gain their trust.
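To make the accept/edit/replace idea concrete, here is a small sketch of how a review decision could determine what students see. The `Decision`, `Review`, and `resolve` names are hypothetical, and the rule that unreviewed content stays hidden is our assumption rather than a documented behavior of the platform.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    ACCEPT = "accept"
    EDIT = "edit"
    REPLACE = "replace"


@dataclass(frozen=True)
class Review:
    decision: Decision
    teacher_text: Optional[str] = None  # required for EDIT and REPLACE


def resolve(ai_text: str, review: Optional[Review]) -> Optional[str]:
    """Return the text students should see, or None while review is pending."""
    if review is None:
        return None                      # assumption: unreviewed content is not shown
    if review.decision is Decision.ACCEPT:
        return ai_text
    if not review.teacher_text:
        raise ValueError("edit and replace decisions require teacher_text")
    return review.teacher_text


if __name__ == "__main__":
    draft = "Revisit lesson 4 on linear equations."
    print(resolve(draft, Review(Decision.ACCEPT)))
    print(resolve(draft, Review(Decision.EDIT, "Revisit lesson 4, exercises 1 to 5.")))
```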

Result

After 6 weeks of monitoring the released LLM-based personalization system, the platform saw:

25% increase in student engagement

17% improvement in quiz scores for students receiving adaptive content

Teachers reported less time spent manually assigning remedial content